The conversation around the impact of AI is all-encompassing, but one aspect that is coming under increasing scrutiny is the vast amount of energy required by data centres as AI adoption continues apace.

In a report released this year, the International Energy Agency estimated that by 2026, the growth of the AI industry will see it consume more than 10 times as much electricity as it did in 2023. The report offered an easily digestible current comparison: on average, a Google search uses 0.3 watt-hours of electricity, while a typical ChatGPT request uses nearly 10 times as much.

This appears to fly in the face of the telecoms sector’s perennial push towards greater sustainability, and indeed major tech firms have conceded that the race to innovate in the AI space is an obstacle to meeting their environmental targets. Google recently cited data centre energy consumption as the key driver for a 48% surge in its greenhouse gas emissions over the past five years, which suggests that environmental concerns are losing out amidst the pressure to lead the way on AI.

After the Gold Rush

Given that tech firms have acknowledged the issue of escalating energy consumption while continuing to invest heavily in AI, it’s easy to reach the conclusion that the environmental impact of AI is a secondary concern among companies looking to survive the AI gold rush. However, framing this question by weighing up the existential concerns of the planet versus those of AI investors is an oversimplification – and one that fails to acknowledge that the latter might help with the former.

Analysys Mason Research Director Simon Sherrington argues: “If [tech firms] don't invest in the next set of tools to drive productivity in the cloud computing world, they will cease to be relevant. In terms of financial costs, they're anticipating they'll generate returns on their investment - otherwise they wouldn't do it. In terms of energy consumption, if AI doubles the energy usage within data centres, or triples it, or multiplies it by 10, the question is: can AI-enabled networks and digital systems deliver more energy efficiency, or more carbon saving, than they generate? Arguably, they can.”

Sherrington agrees that an AI ‘gold rush’ is currently underway, but notes that model training is more expensive in terms of power than using the same model once it has been trained. This means that the current surge in energy consumption may tail off – and additional factors may contribute to this.

“In the future, it's also possible we'll go through that classic trough of disillusionment - implementation proves a bit more problematic than people expect, so everything slows down a little bit”, says Sherrington. “It's not a simple equation, but I don't think not investing in these solutions is necessarily the answer. It's trying to find ways as societies to make sure that the resources are used for the right and useful things. That might be an issue for policymakers as much as anything else: making sure that we're not using Gen AI for the wrong purposes, and… that the resources are channelled in the right directions.”

Sherrington argues that AI capacity can be used to analyse complex systems, create efficiencies, and solve problems that would otherwise prove impossible, although some of the ways consumers actually use the technology may waste resources and power. Even so, AI can deliver societal benefits, whether that’s curing or preventing illnesses to remove the costs of ongoing treatment or care, optimising supply chains, improving agricultural yields, or reducing water consumption and biodiversity impact. For outcomes like these, the argument for investment is clear, even if tech firms cannot control what AI is ultimately used for.

“The energy consumption of telecoms and data centres, it's a small percentage of the amount of power used worldwide”, says Sherrington. “[AI platforms are] providing the tools, but if their corporate users, the innovators can come up with new technologies that reduce the weight of airplanes, make batteries more environmentally sustainable and more efficient, there are ways that that investment can repay itself to society.”

While the telecoms sector is not the largest global consumer of energy, given its association with innovation it has been keen to lead the way on sustainability initiatives – particularly as running a network inherently requires large amounts of energy. However, the surge in energy usage for AI threatens to undercut the industry’s collective push towards renewables, sustainability, and greater energy efficiency. While AI may well enable new efficiencies in the future, if this requires an upfront period of energy-intensive model training, will it be too little too late?

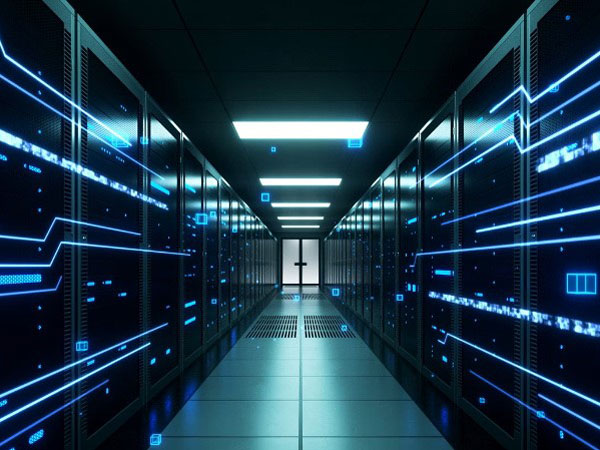

Improving Data Centre Efficiency

Not necessarily, according to Ivan Benitez, Director of Operational Sustainability at co-location provider Equinix. Data centres are the biggest consumers of power with regard to the increased use of AI, and so it will be necessary to improve their energy efficiency as much as possible – preferably while pivoting to renewables. Fortunately, this process is well underway, with Benitez explaining how his company helps its customers scale their businesses while improving operational efficiency to reduce energy consumption.

Benitez highlights that customers aiming for decarbonisation must increase the density of data centres, as this makes them more efficient. “More dense sites means that you don't have to build another data centre if you can still serve more customers on your existing facility”, he notes, adding that sites must be powered using renewable energy to ensure firms can pursue their decarbonisation strategies. This is particularly important in markets where grid power is unreliable. At one Equinix facility in Johannesburg, Benitez notes that every element of the design is aimed at supporting reliability and efficiency, from using high voltage networks that give customers greater uptime to selecting generators that can cover power outages more effectively.

Renewable energy presents an opportunity to improve grid power in a facility’s location through corporate power purchase agreements (PPAs), which are typically focused on wind and solar power. “Bringing in renewables is public infrastructure - they need the stakeholders of multiple parties, we can't do it alone”, says Benitez. “It needs some things from a permitting perspective, from government's perspective, it takes a lot of different stakeholders to come to the grid to make sure that those opportunities materialize.”

Improving unreliable grids via renewable energy sounds like an ideal solution, but this is only part of the equation, with the energy efficiency of data centres also a major factor given the increased computing demand of AI. Liquid cooling systems enhance energy efficiency by removing heat directly from the equipment that generates it, reducing the need for traditional air conditioning. Benitez describes this as “the ability to reject the heat that's driven by the more powerful chips”. These systems can lower the overall power consumption of a data centre, leading to significant energy savings, while also allowing for higher computing densities, enabling more servers to operate within the same physical space while maintaining optimal temperatures.

Energy efficiency is measured by PUE (Power Usage Effectiveness): the ratio of the total power drawn by a data centre to the power used to run customer IT equipment on site, as opposed to cooling or other purposes. A score of 1 is the most desirable, as this would mean 100% of the power in the data centre goes towards running the equipment, whereas a PUE of 3 would mean that for every unit of power reaching the equipment, two more units go towards other elements, highlighting inefficiency.
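
As a rough illustration of the PUE arithmetic described above: PUE is conventionally calculated as total facility energy divided by the energy delivered to IT equipment. The short Python sketch below assumes that definition. The function names and the meter readings are hypothetical, chosen only to reproduce the PUE of 1 and PUE of 3 cases mentioned in the text, and are not figures from Equinix or any real site.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total energy spent on cooling and other non-IT overhead."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return 1 - 1 / pue_value


# Hypothetical readings: 300 kWh drawn in total, 100 kWh reaching IT equipment.
inefficient = pue(300, 100)   # two units of overhead for every unit of IT load
ideal = pue(100, 100)         # every unit drawn reaches the IT equipment

print(f"PUE {inefficient:.1f}: {overhead_share(inefficient):.0%} of energy is overhead")
print(f"PUE {ideal:.1f}: {overhead_share(ideal):.0%} of energy is overhead")
```

Read this way, lowering PUE means shrinking the overhead term, so that more of each unit of power drawn ends up running customer equipment.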

For customers, achieving this at scale will enable massive improvements in efficiency. By handling processing in one market and storing data elsewhere, companies can grow their AI offering by leveraging a global network of data centres, enabling them to enter new markets without having to launch a full suite of services. This is ideal for emerging markets – companies can split their offering so that the desired services are closer to the edge, where the market is, while handling the compute load in a more appropriate location, massively increasing efficiency.

Maintaining a holistic strategy towards decarbonisation requires pursuing this economy of scale, reaching new locations and larger sites. This enables greater efficiencies via higher density, covered by renewable energy. Combining these elements will help data centres keep their consumption in check – and thereby improve the energy efficiency of AI.